Real-Time LiDAR Point-Cloud Moving Object Segmentation for Autonomous Driving

Xie, Xing, Haowen Wei, and Yongjie Yang

Duration: March 2021 – September 2022

Role: Lead Software Engineer

Overview

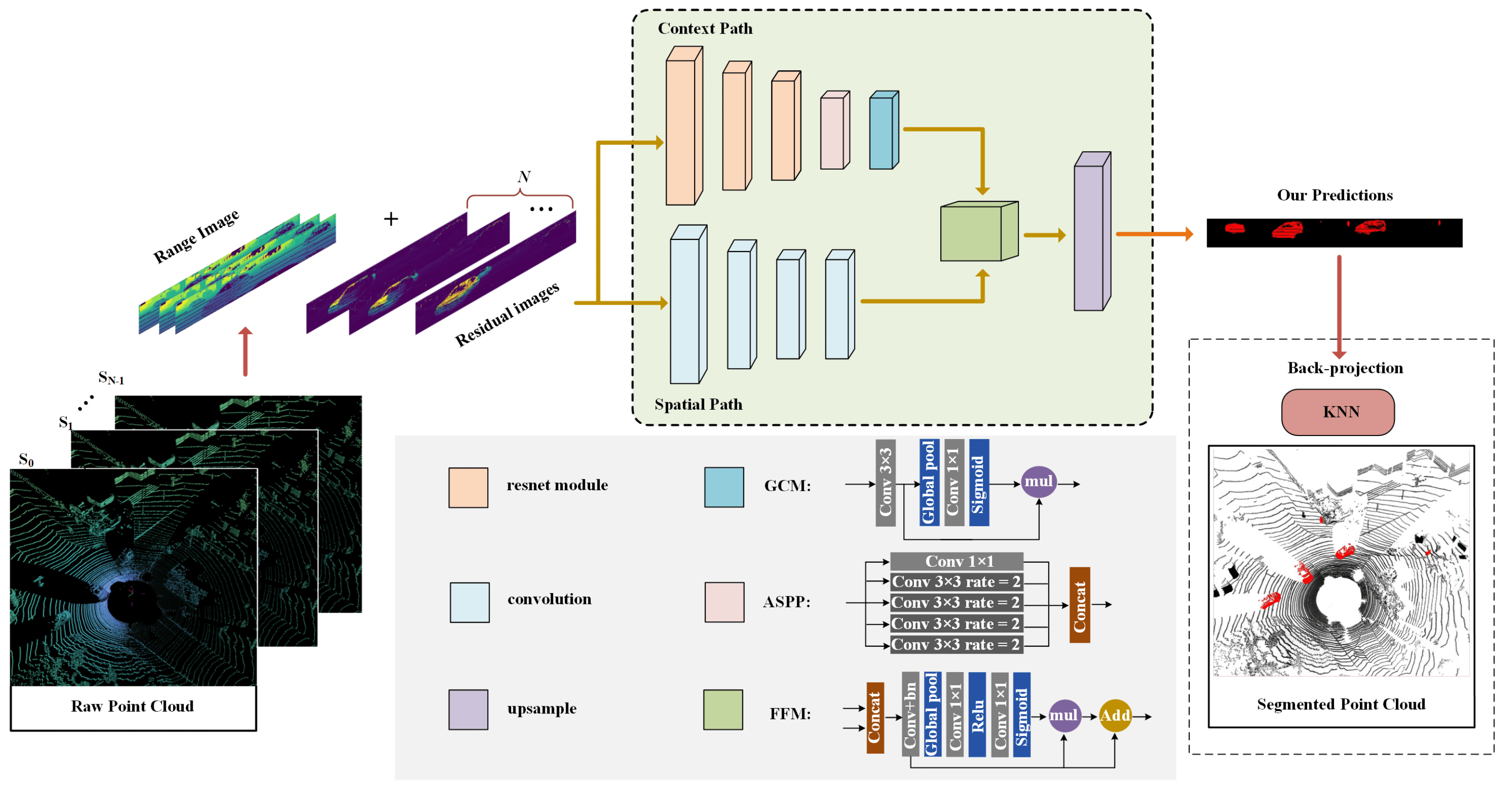

This paper proposes a lightweight convolutional neural network (CNN) for moving object segmentation in LiDAR point clouds, targeting real-time autonomous driving applications. The network uses 66% fewer parameters than current state-of-the-art models while maintaining high processing speeds on both GPU and FPGA platforms. It achieves an IoU score of 51.3% on the SemanticKITTI dataset and processes 32 frames per second (fps) on FPGA, in line with the real-time requirements of autonomous vehicles.
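For readers unfamiliar with the metric, the IoU (intersection over union) quoted above can be illustrated with a minimal sketch. It assumes per-point binary labels; the label ids `MOVING` and `STATIC` and the function `moving_iou` are hypothetical names chosen for illustration, and the labels are toy values rather than SemanticKITTI data. This is not the paper's evaluation code.

```python
from typing import Sequence

MOVING = 1  # hypothetical label id for the "moving" class
STATIC = 0  # hypothetical label id for the "static" class


def moving_iou(pred: Sequence[int], truth: Sequence[int],
               moving_label: int = MOVING) -> float:
    """Return IoU = TP / (TP + FP + FN) for the moving class."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same number of points")
    tp = fp = fn = 0
    for p, t in zip(pred, truth):
        if p == moving_label and t == moving_label:
            tp += 1  # moving point correctly predicted as moving
        elif p == moving_label:
            fp += 1  # static point wrongly predicted as moving
        elif t == moving_label:
            fn += 1  # moving point missed by the prediction
    union = tp + fp + fn
    # If no point is moving in either input, define IoU as 0.0
    # (an assumption made here to avoid dividing by zero).
    return tp / union if union else 0.0


# Toy example with six points (made-up labels).
truth = [MOVING, MOVING, MOVING, STATIC, STATIC, STATIC]
pred = [MOVING, MOVING, STATIC, MOVING, STATIC, STATIC]
iou = moving_iou(pred, truth)  # TP=2, FP=1, FN=1, so IoU = 2 / 4 = 0.5
```

Because true negatives do not enter the formula, the large number of static points in a typical scan does not inflate the score.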

My Contributions

- Network Design: Designed and implemented the lightweight CNN architecture for efficient segmentation of moving and static objects in LiDAR point clouds.

- Optimization: Optimized the system for real-time processing on both an NVIDIA RTX 3090 GPU and FPGA platforms, reaching 32 fps on FPGA.

- Benchmarking: Evaluated the model’s performance on the SemanticKITTI dataset, achieving a competitive 51.3% IoU score for moving object segmentation.

Contributions of the Paper

- Efficient Segmentation: Introduces a lightweight CNN that uses 66% fewer parameters than current state-of-the-art models, reducing the computational burden and enabling real-time performance on resource-constrained hardware.

- Real-Time Performance: Demonstrates real-time processing on both GPU and FPGA platforms, reaching 32 fps on FPGA.

- Benchmark Results: Provides thorough evaluation results on the SemanticKITTI dataset, reporting a competitive 51.3% IoU for moving object segmentation in LiDAR data.

Novelty

- Developed a lightweight CNN architecture that balances accuracy against computational overhead, making real-time moving object segmentation feasible on embedded systems for autonomous driving applications.